GenericTileProcessor¶

- MacroModule¶

genre

author

package

definition

see also

AutoProposeTileProcessorProperties, TensorFlowServingTileProcessor, RemoteTritonTileProcessor, LoadYAML, ONNXTileProcessor

keywords

RedLeaf, machine, deep, learning, tile, classif, predict, regression, provide, Generic, TileClassifier, GTP, roam, process, tensorflow, infer, model, onnx, triton

Purpose¶

Provides a generic/roaming tile processor (i.e. model inference provider) based on a model name, version and a given inference configuration. Thus, the model may be run locally or remotely, depending on the given configuration and the availability of the configured inference services.

Using this generic module is often preferable to using a fixed inference provider (e.g. TensorFlowServingTileProcessor or ONNXTileProcessor), as it lets you switch the inference infrastructure for your entire application through the configuration, e.g. when moving from internal to external deployment.

Depending on the configuration, you can for example:

- Execute an ONNX model directly (in-process, using a local GPU or CPU)

- Connect to a TensorFlow Serving (TFS) or NVIDIA Triton inference service, for instance running on a server with a powerful GPU

Given a model ID, a version and a (YAML) configuration file, the module tries all configured inference providers one by one, in the configured order, until one succeeds.
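The "try each provider until one succeeds" behavior can be pictured with the following minimal sketch. It is not the module's implementation: the factory functions, their signatures and the provider names are hypothetical stand-ins chosen for illustration only.

```python
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical stand-in: a "provider factory" takes (model_name, version)
# and either returns a ready tile processor or raises an exception.
ProviderFactory = Callable[[str, int], Any]


def select_first_working_provider(
    providers: Dict[str, ProviderFactory], model_name: str, version: int
) -> Tuple[Optional[str], Optional[Any], Dict[str, str]]:
    """Try providers in configuration order; return the first that succeeds."""
    errors: Dict[str, str] = {}
    for provider_name, factory in providers.items():  # dicts keep insertion order
        try:
            return provider_name, factory(model_name, version), errors
        except Exception as exc:  # a failing provider is skipped, not fatal
            errors[provider_name] = str(exc)
    return None, None, errors


def unreachable_remote(model_name: str, version: int) -> Any:
    # Simulates a remote service that cannot be reached.
    raise ConnectionError("inference server not reachable")


def local_onnx(model_name: str, version: int) -> Any:
    # Simulates a successful in-process provider.
    return f"in-process processor for {model_name} v{version}"


winner, processor, errors = select_first_working_provider(
    {"Remote-TFS": unreachable_remote, "InProcess-ONNX": local_onnx},
    "heart_segmentation",
    1,
)
print(winner, "|", processor, "|", errors)
```

The order of the entries is what matters here: placing the remote provider first makes the local one a fallback, and vice versa.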

For additionally supported inference providers and parameters, see the local macros in the internal networks.

Usage¶

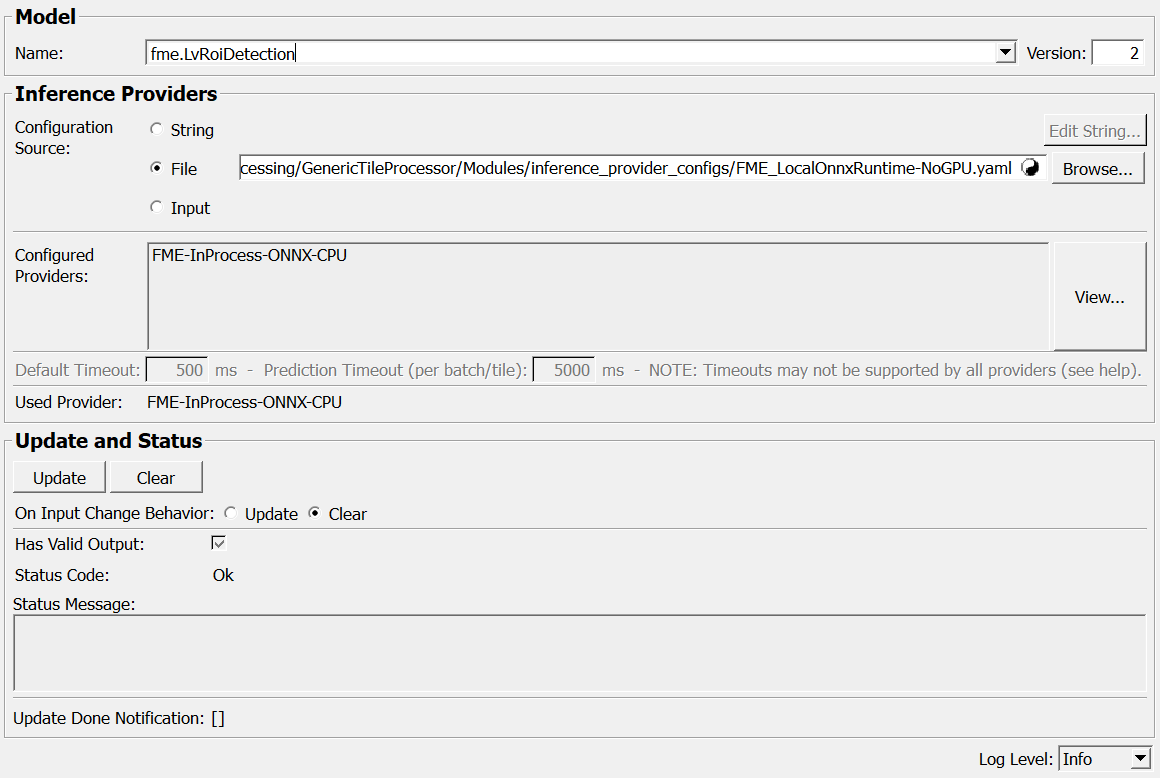

Select a model name/ID at Name, a version (a positive integer) at Version and an inference configuration.

The exact model name/ID format depends on how you organize your model repositories.

For local OnnxRuntime inference, the following repository directory structure is assumed (so the model name/ID must be filename-compatible):

    <model name 1>/
        <version number>/
            model.onnx

e.g.

    heart_segmentation/
        1/
            model.onnx
        2/
            model.onnx
    liver_tumor_classification/
        1/
            model.onnx
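As an illustration of this layout, the sketch below builds the expected `model.onnx` path for a given model name and version and lists the versions present in a repository directory. The helper names `onnx_model_path` and `available_versions` are hypothetical and not part of the module; they only mirror the directory convention described above.

```python
from pathlib import Path
from typing import List


def onnx_model_path(repo_root: str, model_name: str, version: int) -> Path:
    """Return <repo_root>/<model_name>/<version>/model.onnx."""
    if version < 1:
        raise ValueError("version must be a positive integer")
    return Path(repo_root) / model_name / str(version) / "model.onnx"


def available_versions(repo_root: str, model_name: str) -> List[int]:
    """List version directories that actually contain a model.onnx file."""
    model_dir = Path(repo_root) / model_name
    if not model_dir.is_dir():
        return []
    return sorted(
        int(entry.name)
        for entry in model_dir.iterdir()
        if entry.is_dir()
        and entry.name.isdecimal()
        and (entry / "model.onnx").is_file()
    )


# "models" is a placeholder repository root used only for this printout.
print(onnx_model_path("models", "heart_segmentation", 2))
```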

For TensorFlowServing and Triton, model IDs/names can be specified in the corresponding model configurations. If you want to use GenericTileProcessor, make sure to use the same model IDs for the same models.

The inference configuration defines which inference providers (basically ONNXTileProcessor, RemoteTritonTileProcessor and TensorFlowServingTileProcessor) may be used and in which order they will be tried. Check the Configuration Source documentation for details.

To start the inference provider selection and enable the “winner”, press Update.

Details¶

FME-internal users may check out https://www.fme.lan/x/UZziAw on the FME-internal resource variables frequently used in such configurations, and how to set the defaults automatically via SetFmeInternalResourceVariables.

Tips¶

Usage in Applications¶

Using the GenericTileProcessor (GTP) in applications often makes sense, as one usually cannot foresee how the inference infrastructure is set up at the deployment site. There are several ways to use the GTP to make your inference infrastructure configurable after installation, e.g.:

- Using a configuration file from a hardcoded location: When all GTP modules in use are set to that file, you only have to adapt the content of that single file.

- Using MDL variables in your configuration, and adapting the deployed application’s mevislab.prefs.

- Using a configuration file from a location that can be configured via MDL variables: You still set all GTP modules to a single file location, but you keep that file location variable using an MDL variable you can adapt via the mevislab.prefs in the deployed application.

TIP: If you (understandably) don’t like setting all GTP modules manually to the same configuration file (which can be error-prone), you may want to load the configuration just once via a single LoadYAML module in the application’s top-level network (using any of the approaches mentioned above to keep it configurable on deployment) and provide the loaded configuration to the internal components via field connections (setting Configuration Source to “Input” on all GTP modules receiving the config).

Windows¶

Default Panel¶

ParsedConfig¶

StringConfig¶

Input Fields¶

inInferenceProviderConfig¶

- name: inInferenceProviderConfig, type: MLBase¶

When Configuration Source is set to “Input”, you can provide the inference configuration as a Python dictionary via this input field.

Output Fields¶

outTileProcessor¶

- name: outTileProcessor, type: MLBase, deprecated name: outTileClassifier¶

Holds the actual tile processor instance that can be connected to modules such as ApplyTileProcessorPageWise to run inference on image data.

Parameter Fields¶


Visible Fields¶

Name¶

- name: inModelName, type: String¶

Model name, aka. model ID, e.g. “fme.LvRoiDetection”

Dots “.” are used as hierarchy separators, so for file-based model providers, they will usually be mapped to directory separators (/ or \).
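The dot-to-directory mapping can be sketched as follows. How a concrete file-based provider performs this mapping is not documented here, so the function `model_id_to_relative_path` is a hypothetical illustration of the idea rather than the provider's code.

```python
from pathlib import PurePosixPath, PureWindowsPath


def model_id_to_relative_path(model_id: str, windows: bool = False) -> str:
    """Map a dotted model ID to a relative directory path."""
    parts = model_id.split(".")
    if not all(parts):
        # Rejects empty hierarchy levels such as "fme..Model" or ".Model".
        raise ValueError(f"invalid model ID: {model_id!r}")
    path_type = PureWindowsPath if windows else PurePosixPath
    return str(path_type(*parts))


print(model_id_to_relative_path("fme.LvRoiDetection"))
print(model_id_to_relative_path("fme.LvRoiDetection", windows=True))
```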

Version¶

- name: inModelVersion, type: Integer, default: 1, minimum: 1¶

Model version number, must be a positive integer.

Default Timeout¶

- name: inDefaultTimeout_ms, type: Integer, default: 500, deprecated name: inDefaultTimeout_ms_DeprecatedForTesting¶

Timeout in ms for actions such as connecting to or disconnecting from an inference provider. Usually only supported by out-of-process inference providers.

May be overridden by provider-specific settings in the configuration file (check the console log on update).

Prediction Timeout (per batch/tile)¶

- name: inPredictionTimeout_ms, type: Integer, default: 5000¶

Timeout in ms for individual tile (aka. batch) requests. Usually only supported by out-of-process inference providers.

May be overridden by provider-specific settings in the configuration file (check the console log on update).
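The override rule for the two timeouts can be pictured as a simple dictionary merge in which provider-specific settings win over the module's field values. This is a sketch under the assumption that a provider's `parameters` entry uses the same field names as the module; the actual precedence logic is internal to the module, and the value 20000 is an arbitrary example.

```python
from typing import Any, Dict, Optional

# Module-level defaults as documented for the two timeout fields.
MODULE_FIELDS: Dict[str, Any] = {
    "inDefaultTimeout_ms": 500,
    "inPredictionTimeout_ms": 5000,
}


def effective_settings(
    module_fields: Dict[str, Any],
    provider_parameters: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Provider-specific parameters take precedence over module fields."""
    merged = dict(module_fields)  # copy, so the module values stay untouched
    merged.update(provider_parameters or {})
    return merged


# Hypothetical provider entry that allows slower predictions.
print(effective_settings(MODULE_FIELDS, {"inPredictionTimeout_ms": 20000}))
```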

Disable batch-level multithreading¶

- name: inDisableBatchLevelMultithreading, type: Bool, default: FALSE¶

Prevents the module from processing multiple batches in parallel, e.g. to save resources.

If unset and supported by the used inference provider, multi-batch inference operations (e.g. using ProcessTiles) may use up to the number of threads specified in the MeVisLab preferences as “Maximum Threads Used for Image Processing”, which can speed up inference substantially, especially for smaller batches. Otherwise, all batches will be processed sequentially.

May be overridden by provider-specific settings in the configuration file (check the console log on update).
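The difference between sequential and batch-level parallel processing can be sketched with Python's standard thread pool. The function `process_batches` and its parameters are hypothetical; the sketch only illustrates the scheduling idea and says nothing about how the module or its providers implement it.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List, Sequence


def process_batches(
    batches: Sequence[Any],
    predict: Callable[[Any], Any],
    max_threads: int,
    disable_multithreading: bool = False,
) -> List[Any]:
    """Run predict on every batch, in parallel unless disabled."""
    if disable_multithreading or max_threads <= 1:
        return [predict(batch) for batch in batches]  # strictly sequential
    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        # Executor.map returns results in input order, not completion order.
        return list(pool.map(predict, batches))


# "sum" stands in for an inference call on one batch.
print(process_batches([[1, 2], [3, 4], [5]], sum, max_threads=4))
```

Threads help here mainly when each request spends most of its time waiting (e.g. on a remote service), which matches the note above that the gain is largest for smaller batches.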

Log Level¶

- name: consoleLogLevel, type: Enum, default: INFO¶

Minimum level for console logging by GenericTileProcessor itself. Concrete inference tile providers may not support log levels and still log as they please.

May be overridden by provider-specific settings in the configuration file.

Values:

| Title | Name |
|---|---|
| Debug | DEBUG |
| Info | INFO |
| Warning | WARNING |
| Error | ERROR |
| Critical | CRITICAL |
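The level names listed above coincide with the level names of Python's standard `logging` module, so the meaning of a "minimum level" can be illustrated with it. Whether the module uses `logging` internally is an assumption; the helper `is_logged` is hypothetical.

```python
import logging

# Level names as listed in the table above, in ascending severity.
LEVEL_NAMES = ["DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"]


def is_logged(message_level: str, minimum_level: str = "INFO") -> bool:
    """A message is shown if its level is at least the minimum level."""
    if message_level not in LEVEL_NAMES or minimum_level not in LEVEL_NAMES:
        raise ValueError("unknown log level")
    return getattr(logging, message_level) >= getattr(logging, minimum_level)


for name in LEVEL_NAMES:
    print(name, is_logged(name, minimum_level="WARNING"))
```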

Configuration Source¶

- name: inConfigSource, type: Enum, default: File¶

The module lets you provide the list of inference providers (aka “configuration”) via a YAML string, a YAML file, or a Python dictionary.

A configuration is a dictionary with the following structure:

    {
      "Name-Of-First-Provider": {
        "module": <instance name of the module in the internal network, should be a lazily loaded local macro>,
        "parameters": <optional dictionary containing pairs of field name and field value that can be used to parameterize the module>
      },
      "Name-Of-Second-Provider": { ... },
      ...
    }

See ../GenericTileProcessor_ExampleProviderConfig.yaml as an example (note that the referenced resources may not be available to you).
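Expressed as a Python dictionary (the form expected in “Input” mode), a configuration with two providers could look like the sketch below. The provider entries are taken from the default value of the Config Editor field; the function `validate_provider_config` is a hypothetical helper whose checks are inferred from the structure described above, not the module's own validation.

```python
from typing import Any, Dict, List

example_config: Dict[str, Dict[str, Any]] = {
    "FME-Remote-TFS": {
        "module": "ProvideTensorFlowServingTileProcessor",
        "parameters": {"inServerUrl": "$(FME_TFSERVING_URL)"},
    },
    "FME-InProcess-ONNX": {
        "module": "ProvideONNXTileProcessor",
        "parameters": {
            "inForceCPU": False,
            "inModelRepositoryRootDir": "$(FME_ONNX_MODEL_REPO_PATH)",
        },
    },
}


def validate_provider_config(config: Any) -> List[str]:
    """Return a list of structural problems (empty if none were found)."""
    if not isinstance(config, dict):
        return ["configuration must be a dictionary"]
    problems: List[str] = []
    for name, entry in config.items():
        if not isinstance(entry, dict):
            problems.append(f"{name}: entry must be a dictionary")
            continue
        module = entry.get("module")
        if not isinstance(module, str) or not module:
            problems.append(f"{name}: 'module' must be a non-empty string")
        # "parameters" is optional, but must be a dictionary when present.
        if not isinstance(entry.get("parameters", {}), dict):
            problems.append(f"{name}: 'parameters' must be a dictionary")
    return problems


print(list(example_config))  # provider names in the order they would be tried
print(validate_provider_config(example_config))
```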

Values:

| Title | Name | Description |
|---|---|---|
| String | String | Provide the configuration as a YAML string (see the example following this table). As the example shows, you can use MDL variables in the configuration. |
| File | File | Select a YAML file with contents as described for the “String” mode. |
| Input | Input | Provide a dictionary via the field |

Example YAML string for the “String” mode:

    FME-Remote-TFS:
      module: ProvideTensorFlowServingTileProcessor
      parameters:
        inServerUrl: $(FME_TFSERVING_URL)
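The `$(FME_TFSERVING_URL)` placeholder in the “String” example is an MDL variable that MeVisLab resolves itself. The substitution idea can be illustrated with the sketch below; the function `expand_variables`, the choice to leave unknown variables untouched, and the value `localhost:8500` are assumptions made for illustration and do not describe MeVisLab's actual expansion rules.

```python
import re
from typing import Mapping

# Matches placeholders of the form $(NAME).
_VARIABLE = re.compile(r"\$\(([A-Za-z_][A-Za-z0-9_]*)\)")


def expand_variables(text: str, variables: Mapping[str, str]) -> str:
    """Replace $(NAME) with its value; unknown names are left as they are."""

    def replace(match: "re.Match[str]") -> str:
        return variables.get(match.group(1), match.group(0))

    return _VARIABLE.sub(replace, text)


line = "inServerUrl: $(FME_TFSERVING_URL)"
# "localhost:8500" is a made-up value for this illustration.
print(expand_variables(line, {"FME_TFSERVING_URL": "localhost:8500"}))
```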

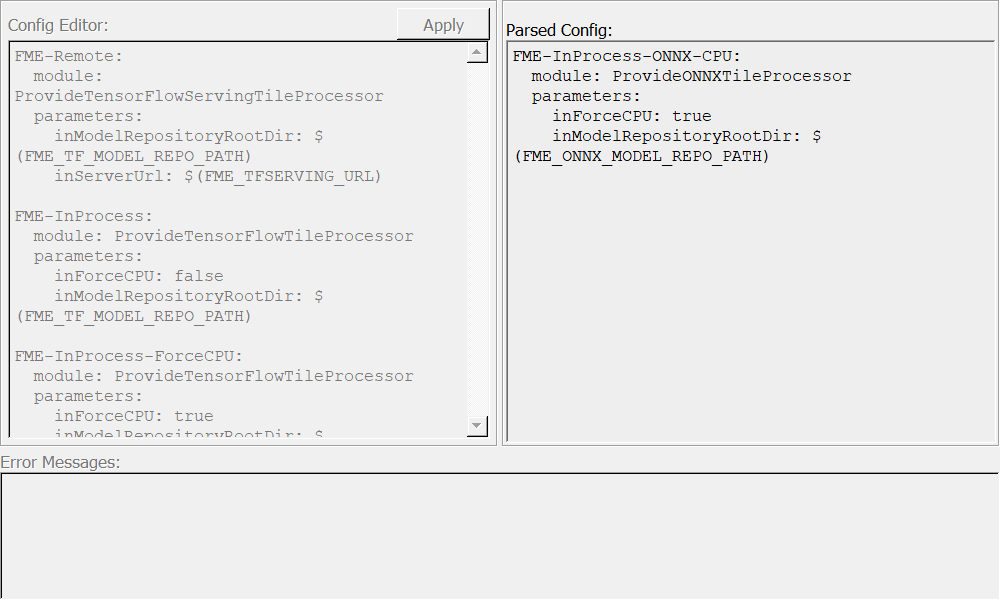

Config Editor¶

- name: inConfigString, type: String, default: see below¶

Default:

    FME-Remote-TFS:
      module: ProvideTensorFlowServingTileProcessor
      parameters:
        inServerUrl: $(FME_TFSERVING_URL)

    FME-InProcess-ONNX:
      module: ProvideONNXTileProcessor
      parameters:
        inForceCPU: false
        inModelRepositoryRootDir: $(FME_ONNX_MODEL_REPO_PATH)

When Configuration Source is set to “String”, you can press the “Edit…” button to pop up a simple editor for the YAML string.

In Config Filename¶

- name: inConfigFilename, type: String, default: $(MLAB_DEFAULT_INFERENCE_CONFIG)¶

When Configuration Source is set to “File”, you can use this field to provide a YAML file containing the inference configuration.

If you have a configuration you would like to use by default, it is recommended to leave this field at its default ($(MLAB_DEFAULT_INFERENCE_CONFIG)) and set the MLAB_DEFAULT_INFERENCE_CONFIG variable to your configuration file location in the mevislab.prefs or via an environment variable.

See ../GenericTileProcessor_ExampleProviderConfig.yaml for an example configuration file (note that the referenced resources may not be available to you).

Require valid file signature in application mode¶

- name: inCheckConfigFileSignatureForCurrentLicense, type: Bool, default: FALSE¶

If enabled, the module will expect the config file to be signed by MeVisLab (it may also be encrypted) for the current license.

In particular, when used in a standalone application with a normal user (i.e. non-developer) license, the file must be signed for application usage, or it will be rejected.

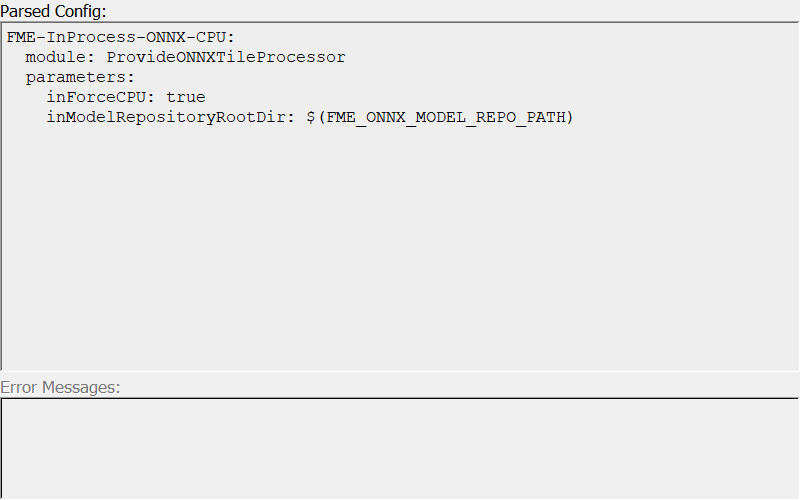

Parsed Config¶

- name: outUsedConfigString, type: String, persistent: no¶

The currently active configuration, dumped into a YAML string to be checked by the user. If empty, check Configuration Source.

Used Provider¶

Configured Providers¶

- name: outConfiguredProviders, type: String, persistent: no¶

(Ordered) List of providers found in the current configuration

Update¶

- name: update, type: Trigger¶

Initiates update of all output field values.

Clear¶

- name: clear, type: Trigger¶

Clears all output field values to a clean initial state.

On Input Change Behavior¶

- name: onInputChangeBehavior, type: Enum, default: Clear, deprecated name: shouldAutoUpdate,shouldUpdateAutomatically¶

Declares how the module should react if a value of an input field changes.

Values:

| Title | Name | Deprecated Name |
|---|---|---|
| Update | Update | TRUE |
| Clear | Clear | FALSE |

[]¶

- name: updateDone, type: Trigger, persistent: no¶

Notifies that an update was performed (check the status interface fields to identify success or failure).

Has Valid Output¶

- name: hasValidOutput, type: Bool, persistent: no¶

Indicates validity of output field values (success of computation).

Status Code¶

- name: statusCode, type: Enum, persistent: no¶

Reflects the module’s status (successful or failed computation) as one of several predefined enumeration values.

Values:

| Title | Name |
|---|---|
| Ok | Ok |
| Invalid input object | Invalid input object |
| Invalid input parameter | Invalid input parameter |
| Internal error | Internal error |

Status Message¶

- name: statusMessage, type: String, persistent: no¶

Gives additional, detailed information about the status code as a human-readable message.