ONNXTileProcessor

Purpose

Runs inference on an ONNX neural network model file via the ONNX Runtime C++ API for voxel processing.

Usage

Select an ONNX model file and press Update. Then connect the module's output to a tile-processing module such as ProcessTiles, ApplyTileProcessorPageWise, or ApplyTileProcessor (see example networks), and connect input images in the required format. Check the parameter info at outTileProcessor for information on the input and output tensors.

Details

Model Config / Meta Data Support

In some situations, you may want to store and load additional parameters together with the model. This is supported to some degree through the ONNX ModelMetadata that can be provided with each model.

You may provide arbitrary key/value meta information through the following format:

parameters: {
  key: "some custom parameter"
  value: {
    string_value: "some custom string value"
  }
}

parameters: {
  key: "some custom complex parameter provided via JSON"
  value: {
    string_value: "{ \"some string key\": \"some string value\", \"some int key\": 42 }"
  }
}

Note that the second example provides a JSON-compatible string (with its double quotes (") escaped) to encode more complex data structures.

The key/value pairs are then available in the ‘parameter info’ at the module output, which you can inspect in multiple ways, e.g. through the output inspector or with the ParameterInfoInspector, or programmatically as a Python dictionary via ctx.field( "outTileProcessor" ).object().getParameterInfo(). If a JSON-compatible string is provided (as in the second example), the JSON is parsed and provided as a dictionary.
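Hand-escaping quotes is error-prone. The following minimal sketch shows two things in plain Python: building the JSON value with json.dumps (so no manual escaping is needed when setting metadata programmatically), and a hypothetical decode_parameter_info function that mimics the parsing behavior described above. The rule that only values parsing to a JSON object become dictionaries is an assumption, not the module's documented implementation.

```python
import json

# Hypothetical nested settings to store as a model metadata value.
settings = {"some string key": "some string value", "some int key": 42}

# json.dumps produces a valid JSON string; quotes are handled automatically.
encoded = json.dumps(settings)


def decode_parameter_info(metadata):
    """Hypothetical emulation of the described decoding (an assumption):
    values that parse as a JSON object become dictionaries,
    every other value is kept as the original string."""
    decoded = {}
    for key, value in metadata.items():
        try:
            parsed = json.loads(value)
        except json.JSONDecodeError:
            parsed = value
        decoded[key] = parsed if isinstance(parsed, dict) else value
    return decoded


info = decode_parameter_info({
    "some custom parameter": "some custom string value",
    "some custom complex parameter provided via JSON": encoded,
})
print(info["some custom complex parameter provided via JSON"]["some int key"])  # 42
```

A plain string that is not valid JSON, such as "some custom string value", stays a string in this sketch.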

Inference Tile Properties

A special case of such JSON-encoded properties is inference_tile_properties, which can be used to propose the TileProcessorProperties required for page-wise processing with ProcessTiles or ApplyTileProcessorPageWise (and, to some degree, for non-page-wise processing with ApplyTileProcessor).

If a model comes with correct inference tile properties, it can be used immediately for page-wise processing via ProcessTiles, without having to add and adapt a SetTileProcessorProperties or SetTileProcessorPropertiesFromJson module for manual adjustment.

Note that inference tile properties are always expected to be stored as a JSON-compatible string; any JSON parser problem results in an error.
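Since any parser problem results in an error, it can help to validate a hand-written inference_tile_properties string before embedding it. The function below is a hypothetical pre-flight check using only Python's json module. The requirement that "inputs" and "outputs" are JSON objects is an assumption derived from the example on this page, not a complete schema of what the module accepts.

```python
import json


def check_inference_tile_properties(text):
    """Hypothetical pre-flight check for an inference_tile_properties string.

    Raises ValueError on invalid JSON. The 'inputs'/'outputs' checks are
    assumptions based on this page's example, not the module's full schema."""
    try:
        props = json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError(f"not valid JSON: {err}") from err
    if not isinstance(props, dict):
        raise ValueError("top level must be a JSON object")
    for section in ("inputs", "outputs"):
        if not isinstance(props.get(section), dict):
            raise ValueError(f"missing or invalid '{section}' object")
    return props
```

For example, a string using single quotes such as "{ 'inputs': {} }" is rejected, because JSON requires double-quoted keys.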

This is a working example for adding inference_tile_properties to an existing ONNX model:

import onnx

model = onnx.load( "model.onnx" )

new_meta = model.metadata_props.add()

new_meta.key = "inference_tile_properties"

new_meta.value = "{ \"inputs\": { \"input\": { \"dataType\": \"float32\", \"dimensions\": \"X, Y, CHANNEL1, BATCH\", \"externalDimensionForChannel1\": \"C\", \"externalDimensionForChannel2\": \"U\", \"fillMode\": \"Reflect\", \"fillValue\": 0.0, \"padding\": [8, 8, 0, 0, 0, 0] } }, \"outputs\": { \"scores\": { \"dataType\": \"float32\", \"referenceInput\": \"input\", \"stride\": [1.0, 1.0, 1.0, 1.0, 1.0, 1.0], \"tileSize\": [44, 44, 2, 1, 1, 1], \"tileSizeMinimum\": [2, 2, 2, 1, 1, 1], \"tileSizeOffset\": [2, 2, 0, 1, 0, 0] } } }"

onnx.save_model( model, "model_with_inference_tile_properties_meta_data.onnx" )
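Instead of writing the escaped string by hand, the same inference tile properties can be kept as a Python dictionary and serialized with json.dumps. The sketch below reproduces the values from the script above; assigning the resulting value to new_meta.value there should yield equivalent metadata. The variable names are illustrative only.

```python
import json

# Same properties as in the script above, expressed as a Python dict.
inference_tile_properties = {
    "inputs": {
        "input": {
            "dataType": "float32",
            "dimensions": "X, Y, CHANNEL1, BATCH",
            "externalDimensionForChannel1": "C",
            "externalDimensionForChannel2": "U",
            "fillMode": "Reflect",
            "fillValue": 0.0,
            "padding": [8, 8, 0, 0, 0, 0],
        }
    },
    "outputs": {
        "scores": {
            "dataType": "float32",
            "referenceInput": "input",
            "stride": [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
            "tileSize": [44, 44, 2, 1, 1, 1],
            "tileSizeMinimum": [2, 2, 2, 1, 1, 1],
            "tileSizeOffset": [2, 2, 0, 1, 0, 0],
        }
    },
}

# Serialize once; json.dumps takes care of quoting and escaping.
value = json.dumps(inference_tile_properties)
```

Keeping the dictionary as the source of truth also makes it easy to adjust single entries (e.g. tileSize) without touching an escaped string.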

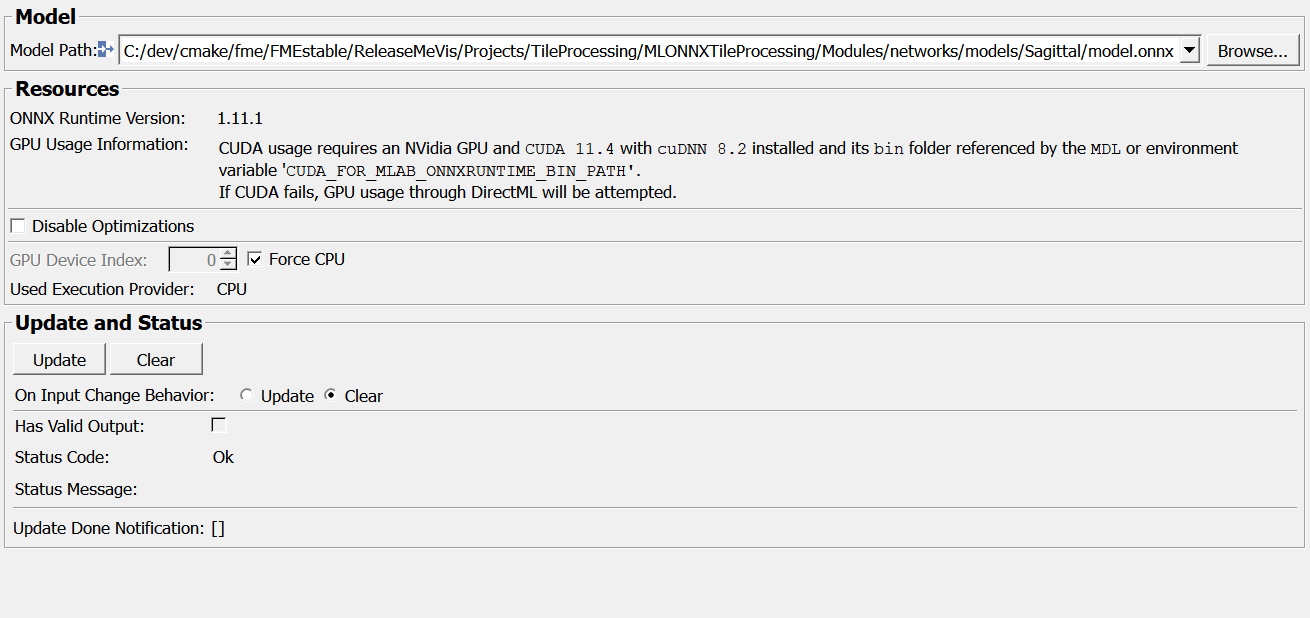

GPU Support

You can configure GPU usage through Force CPU and GPU Device Index. Check Used Execution Provider to see which device is actually used. Depending on your operating system and setup, CPU, CUDA, or DirectML may be selected (priority: CUDA > DirectML > CPU).

On Update, the module may log additional information on the execution provider selection to the console. If no GPU is shown although you have one, check the console and the GUI under GPU Usage Hints (if you are within the Fraunhofer MEVIS intranet, you may also refer to this page for details).
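The selection priority can be illustrated with a small sketch. It uses the provider identifiers of ONNX Runtime's Python API (as returned by onnxruntime.get_available_providers()) and applies the documented order CUDA > DirectML > CPU plus the Force CPU override. This only models the rule stated above; it is not the module's C++ implementation.

```python
# Provider identifiers as reported by onnxruntime.get_available_providers().
PROVIDER_PRIORITY = (
    "CUDAExecutionProvider",
    "DmlExecutionProvider",  # DirectML
    "CPUExecutionProvider",
)


def select_execution_provider(available, force_cpu=False):
    """Pick one provider following the documented priority CUDA > DirectML > CPU.

    Sketch of the rule on this page, not the module's implementation."""
    if force_cpu:
        return "CPUExecutionProvider"
    for provider in PROVIDER_PRIORITY:
        if provider in available:
            return provider
    # ONNX Runtime always provides the CPU provider as a fallback.
    return "CPUExecutionProvider"
```

With the onnxruntime Python package installed, select_execution_provider(onnxruntime.get_available_providers()) shows what this rule would choose on the current machine. In that Python API, a GPU device index is passed as a provider option, e.g. ("CUDAExecutionProvider", {"device_id": 1}).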

Multithreading Support

Two kinds of multithreading are available.

Batch-Level Multithreading

On CPU, batch-level multithreading is enabled by default because it is known to work reliably and generally speeds up processing, sometimes substantially, depending on batch size, model, and hardware resources. It can be disabled through Disable batch-level multithreading if you need to save CPU or memory resources.

With the CUDA GPU provider, batch-level multithreading is disabled by default because of these known issues:

- With any ONNX optimizations enabled (even BASIC), result differences and even crashes have been observed.
- Speedups may occur for small batches, but for larger ones, the GPU (memory) easily gets overloaded, resulting in a slowdown by a factor of 10 or more.

If you know what you are doing, you can set the environment variable ONNXTILEPROCESSOR_ALLOW_MULTI_THREADING_CUDA to enable it for CUDA as well.

Setting Disable batch-level multithreading disables the feature for any execution provider, overriding the environment variable.
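The rules above can be summarized as a small decision function. This is a hypothetical sketch of the documented behavior only: it assumes the environment variable counts as set when it has a non-empty value (the page does not state which values are accepted), and it returns None for providers such as DirectML, whose default is not documented here.

```python
import os

ENV_ALLOW_CUDA = "ONNXTILEPROCESSOR_ALLOW_MULTI_THREADING_CUDA"


def batch_multithreading_enabled(provider, disable_flag, environ=None):
    """Return whether batch-level multithreading would be used.

    Hypothetical sketch of the documented rules. Assumption: the variable
    counts as set when its value is non-empty. Returns None for providers
    whose default is not documented on this page."""
    if environ is None:
        environ = os.environ
    if disable_flag:
        # "Disable batch-level multithreading" overrides everything else.
        return False
    if provider == "CPUExecutionProvider":
        return True  # enabled by default on CPU
    if provider == "CUDAExecutionProvider":
        return bool(environ.get(ENV_ALLOW_CUDA))  # opt-in via environment
    return None
```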

ONNX Runtime Internal Multithreading

You can set how many CPU threads an ONNX Runtime (ORT) session may use. As the ORT version in MeVisLab is built with OpenMP, use the OpenMP environment variable OMP_NUM_THREADS (see the Microsoft documentation) to control the “intra op num threads”.

For more info, see the ONNX Runtime performance tuning guide.

NOTE: The above environment variables need to be set before ONNXTileProcessor is instantiated. They then apply to all subsequent sessions created within the same process.
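Because these variables must exist before ONNXTileProcessor is instantiated, one robust option is to set them in the environment of the MeVisLab process itself, e.g. when launching it from a script. The helper below is a hypothetical sketch; the value "1" for the CUDA opt-in variable is an assumption, since this page only says to set the variable.

```python
import os


def mevislab_environment(omp_threads=None, allow_cuda_multithreading=False, base=None):
    """Hypothetical helper: copy an environment and add the variables from this section.

    A process started with this environment has the variables available from
    the start, i.e. before any ONNXTileProcessor is instantiated."""
    env = dict(os.environ if base is None else base)
    if omp_threads is not None:
        # Controls ORT's intra-op threads in OpenMP builds.
        env["OMP_NUM_THREADS"] = str(omp_threads)
    if allow_cuda_multithreading:
        # Assumption: any non-empty value enables the CUDA opt-in.
        env["ONNXTILEPROCESSOR_ALLOW_MULTI_THREADING_CUDA"] = "1"
    return env
```

For example, subprocess.run([mevislab_executable], env=mevislab_environment(omp_threads=4)) would start MeVisLab with four intra-op threads, where mevislab_executable is a hypothetical path to your MeVisLab installation.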

Model Encryption Support

In addition to plain ONNX model files (.onnx), the module can also read encrypted ONNX model files through the MLBinaryEncryption API.

The decryption algorithm (if any) is detected by file extension. For decryption to work, the decryptor and key DLLs need to be loaded already. In an SDK setup, this is usually not a problem.

When building standalone applications, however, MeVisLab has no way of auto-detecting the dependencies on the decryption algorithm and key by analyzing your application network. Hence, you need to manually ensure that these are included in your installer. Assuming the encryption algorithm is BinaryEncryption_AES256_GCM and the key library is named ExampleKeyProvider, there are two alternatives to achieve this:

- Adding the deployment helper modules (e.g. BinaryEncryption_AES256_GCM and ExampleKeyProvider, assuming you have those) to your application network
- Adding dll = MLBinaryEncryption_AES256_GCM and dll = MLExampleKeyProvider (or, if available, the deployment helper modules as above via module = ...) to your application macro’s Deployment section

Models can be encrypted using the module FileEncrypter, if you have it available in your SDK.

Bool Datatype Support

As MeVisLab does not support bool images or subimages (at least not in the same way it supports the other data types), bool is not a supported data type in the TileProcessing framework. However, ONNXTileProcessor supports input tensors of type bool by requesting uint8 instead and casting such an input tensor to bool before providing it to ONNX Runtime for inference.
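The uint8-to-bool cast can be pictured with NumPy. This is only an illustration: the module performs the cast internally in C++, and whether values other than 0 and 1 are treated exactly as below is an assumption. With NumPy semantics, every nonzero value becomes True, so feeding 0/1 masks is the safest choice.

```python
import numpy as np

# Example uint8 tile as it might be requested from the TileProcessing framework.
tile_uint8 = np.array([[0, 1], [2, 255]], dtype=np.uint8)

# Cast to bool before inference; NumPy maps every nonzero value to True.
tile_bool = tile_uint8.astype(np.bool_)
print(tile_bool.tolist())  # [[False, True], [True, True]]
```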

Windows

Default Panel

Output Fields

outTileProcessor

- name: outTileProcessor, type: ONNXTileProcessor(MLBase), deprecated name: outModelConnector, outTileClassifier

Provides a C++ TileProcessor object that talks to the ONNX Runtime backend. Usually connected to ProcessTiles or ApplyTileProcessor.

Parameter Fields

Visible Fields

Update

- name: update, type: Trigger

Initiates update of all output field values.

Clear

- name: clear, type: Trigger

Clears all output field values to a clean initial state.

On Input Change Behavior

- name: onInputChangeBehavior, type: Enum, default: Clear, deprecated name: shouldAutoUpdate, shouldUpdateAutomatically

Declares how the module should react if a value of an input field changes.

Values:

| Title | Name | Deprecated Name |
|---|---|---|
| Update | Update | TRUE |
| Clear | Clear | FALSE |

Status Code

- name: statusCode, type: Enum, persistent: no

Reflects the module’s status (successful or failed computations) as one of the predefined enumeration values.

Values:

| Title | Name |
|---|---|
| Ok | Ok |
| Invalid input object | Invalid input object |
| Invalid input parameter | Invalid input parameter |
| Internal error | Internal error |

Status Message

- name: statusMessage, type: String, persistent: no

Gives additional, detailed information about the status code as a human-readable message.

Has Valid Output

- name: hasValidOutput, type: Bool, persistent: no

Indicates validity of output field values (success of computation).

[]

- name: updateDone, type: Trigger, persistent: no

Notifies that an update was performed (check the status interface fields to identify success or failure).
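In a MeVisLab Python macro, the fields above can be combined to load a model and check the result. The sketch below is hypothetical: it assumes module is the scripting object of an ONNXTileProcessor instance (e.g. ctx.module("ONNXTileProcessor"), where the instance name is illustrative), that field values can be set via .value and triggers fired via .touch(), and that the update completes synchronously when the trigger is touched. Field names and getParameterInfo() are taken from this page.

```python
def load_model(module, model_path):
    """Hypothetical helper: load an ONNX model and return its parameter info.

    Assumes the usual MeVisLab scripting field API (field(), .value, touch(),
    object()) and a synchronous update when the 'update' trigger is touched."""
    module.field("inModelPath").value = model_path
    module.field("update").touch()
    if not module.field("hasValidOutput").value:
        # statusMessage carries the human-readable reason for the failure.
        raise RuntimeError(module.field("statusMessage").value)
    return module.field("outTileProcessor").object().getParameterInfo()
```

Raising on failure keeps the statusMessage text visible to the caller instead of silently continuing with an invalid output.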

Force CPU

- name: inForceCPU, type: Bool, default: FALSE

Forces use of the CPU even if a GPU is present.

GPU Device Index

Disable Graph Optimizations

- name: inDisableGraphOptimizations, type: Bool, default: FALSE, deprecated name: inDisableOptimizations

Disables all graph optimizations by ONNX Runtime. This is necessary to use Dropout layers, which would otherwise be removed.

Disable batch-level multithreading

- name: inDisableBatchLevelMultithreading, type: Bool, default: FALSE, deprecated name: inDisableMultithreading

Prevents processing multiple batches in parallel, e.g. to save memory or compute resources.

If unset, multi-batch inference operations (e.g. using ProcessTiles) may use up to the number of threads specified in the MeVisLab preferences as “Maximum Threads Used for Image Processing”, which can speed up inference substantially, especially for smaller batches. If set, all batches are processed sequentially.

Used Execution Provider

ONNX Runtime Version

- name: outONNXRuntimeVersion, type: String, persistent: no

Shows the ONNX Runtime version in use.

Model Path

- name: inModelPath, type: String

Path to the ONNX model file (usually a ‘.onnx’ file).

Models encrypted using the FileEncrypter module are supported if they have the file extension ‘.enc’.