NNUNetInference¶

Purpose¶

Performs overlapping, weighted, tile-based inference for nnU-Net models, analogous to the inference algorithm in the nnU-Net code base.

nnU-Net paper: https://www.nature.com/articles/s41592-020-01008-z

nnU-Net code base: https://github.com/MIC-DKFZ/nnUNet/tree/master/nnunetv2/inference

Usage¶

Connect the image and a TileProcessor providing the trained nnU-Net (e.g. using an ONNXTileProcessor or RemoteTritonTileProcessor). Set the parameters according to the plans.pkl or plans.json file of the trained nnU-Net.

If your model contains the necessary properties, you can use Setup From Model Metadata to set up the necessary configuration.

If you have an nnU-Net plans.json or plans.pkl file for your model, you can use the setupFromPlan() method of this module:

planPath = "/path/to/your/plans.json"

nnunetinference = ctx.module("NNUNetInference").object()

nnunetinference.setupFromPlan(planPath)

You can also use setupFromONNXTileProcessor() to automatically load the properties from the folder in which the ONNX model is saved:

onnxTileProcessor = ctx.module("ONNXTileProcessor")

nnunetinference = ctx.module("NNUNetInference").object()

nnunetinference.setupFromONNXTileProcessor(onnxTileProcessor)
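To check which values a plan provides before handing it to the module, a plan file can be inspected with plain Python. The following is a minimal sketch, assuming an nnU-Net v2 style plans.json: the key names (`configurations`, `patch_size`, `spacing`, `normalization_schemes`, `foreground_intensity_properties_per_channel`) are assumptions about the plan layout and may differ between nnU-Net versions. The helpers `load_plan` and `summarize_plan` and the example values are hypothetical and not part of this module.

```python
import json


def load_plan(path):
    """Read a plans.json file into a dictionary."""
    with open(path) as handle:
        return json.load(handle)


def summarize_plan(plan, configuration="3d_fullres"):
    """Collect plan values that relate to the module parameters.

    Assumption: nnU-Net v2 style key names. nnU-Net may order axes
    differently than MeVisLab, so check the axis order before
    copying values into Patch Size or Voxel Size.
    """
    config = plan["configurations"][configuration]
    intensity = plan.get("foreground_intensity_properties_per_channel", {})
    channel0 = intensity.get("0", {})
    return {
        "patch_size": config["patch_size"],
        "spacing": config["spacing"],
        "normalization_schemes": config.get("normalization_schemes", []),
        "mean": channel0.get("mean"),
        "std": channel0.get("std"),
    }


# Made-up plan fragment for illustration only.
example_plan = {
    "configurations": {
        "3d_fullres": {
            "patch_size": [64, 128, 128],
            "spacing": [2.5, 0.8, 0.8],
            "normalization_schemes": ["CTNormalization"],
        }
    },
    "foreground_intensity_properties_per_channel": {
        "0": {"mean": 100.0, "std": 50.0}
    },
}

summary = summarize_plan(example_plan)
print(summary["patch_size"], summary["spacing"], summary["mean"], summary["std"])
```

For a real plan, `summarize_plan(load_plan(planPath))` would produce the same kind of summary.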

Details¶

This module is about using a single U-Net trained by nnU-Net for inference, not about the full prediction including ensembling. The module applies the same preprocessing and inference with overlapping patches as nnUNet_predict would. The results are not exactly the same, mostly due to a different resampling that cannot easily be reproduced in MeVisLab, but typically they are very close (Dice > 0.97).
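The idea of inference with overlapping, weighted patches can be illustrated in one dimension. The sketch below is not the module's implementation: the tile placement is modelled on the sliding-window scheme of the nnU-Net code base, and the step fraction of 0.5 and the Gaussian width of one eighth of the tile length are assumptions chosen for illustration. All function names are hypothetical.

```python
import math


def tile_starts(image_length, tile_length, step_fraction=0.5):
    """Evenly spaced tile start positions that cover the whole image."""
    if image_length < tile_length:
        raise ValueError("image must be at least as long as one tile")
    target_step = tile_length * step_fraction
    num_steps = int(math.ceil((image_length - tile_length) / target_step)) + 1
    max_start = image_length - tile_length
    if num_steps == 1:
        return [0]
    actual_step = max_start / (num_steps - 1)
    return [int(round(actual_step * i)) for i in range(num_steps)]


def gaussian_weights(tile_length, sigma_scale=0.125):
    """Weights that favour the tile centre over the tile border."""
    center = (tile_length - 1) / 2.0
    sigma = tile_length * sigma_scale
    return [math.exp(-0.5 * ((i - center) / sigma) ** 2) for i in range(tile_length)]


def blend_tiles(signal, tile_length, predict):
    """Run `predict` on overlapping tiles and blend the weighted results."""
    weights = gaussian_weights(tile_length)
    accumulated = [0.0] * len(signal)
    weight_sum = [0.0] * len(signal)
    for start in tile_starts(len(signal), tile_length):
        prediction = predict(signal[start:start + tile_length])
        for offset, (value, weight) in enumerate(zip(prediction, weights)):
            accumulated[start + offset] += weight * value
            weight_sum[start + offset] += weight
    # Normalize by the summed weights so overlapping regions are averaged.
    return [a / w for a, w in zip(accumulated, weight_sum)]


starts = tile_starts(100, 64)
blended = blend_tiles([float(i) for i in range(100)], 64, lambda tile: list(tile))
```

With an identity "model" as in the last line, the blended result reproduces the input, because every position receives a weighted average of identical values.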

Windows¶

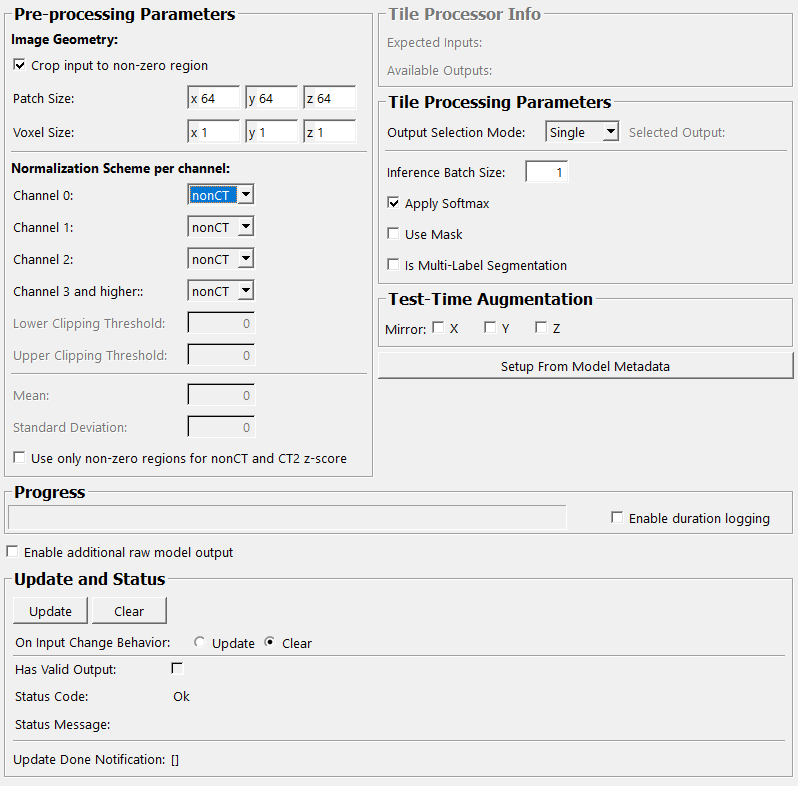

Default Panel¶

Input Fields¶

inImage¶

- name: inImage, type: Image, deprecated name: input0¶

Image to be segmented.

inTileProcessor¶

- name: inTileProcessor, type: TileProcessor/TileProcessorContainer(MLBase)¶

TileProcessor wrapping the nnU-Net, e.g. ONNXTileProcessor or RemoteTritonTileProcessor.

inMask¶

- name: inMask, type: Image¶

Optional input mask. It is used during post-processing to remove any segmented objects outside the mask.

Output Fields¶

outImage¶

- name: outImage, type: Image, deprecated name: output0¶

Inference result (one-hot encoded).

outputRawProbabilities¶

- name: outputRawProbabilities, type: Image¶

Optional output that provides the raw softmax output before binarization into discrete labels. It can be enabled with the field Enable additional raw model output.

Parameter Fields¶

Visible Fields¶

Update¶

- name: update, type: Trigger¶

Initiates update of all output field values.

Clear¶

- name: clear, type: Trigger¶

Clears all output field values to a clean initial state.

On Input Change Behavior¶

- name: onInputChangeBehavior, type: Enum, default: Clear, deprecated name: shouldAutoUpdate, shouldUpdateAutomatically¶

Declares how the module should react if a value of an input field changes.

Values:

| Title | Name | Deprecated Name |
|---|---|---|
| Update | Update | TRUE |
| Clear | Clear | FALSE |

updateDone¶

- name: updateDone, type: Trigger, persistent: no¶

Notifies that an update was performed (check the status interface fields to identify success or failure).

Has Valid Output¶

- name: hasValidOutput, type: Bool, persistent: no¶

Indicates validity of output field values (success of computation).

Status Code¶

- name: statusCode, type: Enum, persistent: no¶

Reflects the module’s status (successful or failed computation) as one of the predefined enumeration values.

Values:

| Title | Name |
|---|---|
| Ok | Ok |
| Invalid input object | Invalid input object |
| Invalid input parameter | Invalid input parameter |
| Internal error | Internal error |

Status Message¶

- name: statusMessage, type: String, persistent: no¶

Gives additional, detailed information about status code as human-readable message.
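In a script, the update and status fields can be combined to run the inference and react to failures. The following is a minimal sketch, assuming the module instance is named NNUNetInference and that the update has completed when touching the trigger returns. `ctx` is the MeVisLab scripting context; the helper name `run_inference` is hypothetical.

```python
def run_inference(ctx, module_name="NNUNetInference"):
    """Touch the update trigger and raise if the module reports a failure.

    Assumption: the update finishes synchronously, so the status fields
    can be read directly after touching the trigger.
    """
    ctx.field(module_name + ".update").touch()
    if not ctx.field(module_name + ".hasValidOutput").value:
        code = ctx.field(module_name + ".statusCode").value
        message = ctx.field(module_name + ".statusMessage").value
        raise RuntimeError("%s failed (%s): %s" % (module_name, code, message))
    return True
```

Raising an exception keeps the status message visible to the caller instead of silently continuing with an invalid output.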

Enable Duration Logging¶

- name: enableDurationLogging, type: Bool, default: FALSE, deprecated name: debug¶

Runs the module in debug mode, which provides additional logging output and timing measurements.

Patch Size¶

- name: inPatchSize, type: IntVector3, default: 64 64 64¶

Patch size used for inference.

Voxel Size¶

- name: inVoxelSize, type: Vector3, default: 1 1 1¶

Voxel size used for inference.

Expected Inputs¶

- name: availableInputs, type: String, persistent: no¶

Comma-separated list of all inputs declared by the current TileProcessor.

Selected Output¶

- name: availableOutputs, type: String, persistent: no¶

Comma-separated list of all outputs declared by the current TileProcessor.

Output Selection Mode¶

- name: inModelOutputSelectionMode, type: Enum, default: Single¶

Choose how the module decides which of the processor outputs to pick. If it has only one output, just leave “Single”.

Values:

| Title | Name |
|---|---|
| Single | Single |
| Custom | Custom |

Custom Output¶

- name: inCustomModelOutput, type: String¶

Name of the model output to pick when Output Selection Mode is ‘Custom’.

Channel 0¶

- name: inNormalizationSchemeC0, type: Enum, default: nonCT, deprecated name: inNormalizationScheme¶

Normalization scheme for channel 0, consisting of clipping and standardization ((value-mean)/std)

Values:

| Title | Name |
|---|---|
| CT | CT |
| CT2 | CT2 |
| nonCT | nonCT |
| none | none |

Channel 1¶

- name: inNormalizationSchemeC1, type: Enum, default: nonCT¶

Normalization scheme for channel 1

Values:

| Title | Name |
|---|---|
| CT | CT |
| CT2 | CT2 |
| nonCT | nonCT |
| none | none |

Channel 2¶

- name: inNormalizationSchemeC2, type: Enum, default: nonCT¶

Normalization scheme for channel 2

Values:

| Title | Name |
|---|---|
| CT | CT |
| CT2 | CT2 |
| nonCT | nonCT |
| none | none |

Channel 3 and higher:¶

- name: inNormalizationSchemeC3andHigher, type: Enum, default: nonCT, deprecated name: inNormalizationSchemeC3¶

Normalization scheme for channel 3 and all higher channels

Values:

| Title | Name |
|---|---|
| CT | CT |
| CT2 | CT2 |
| nonCT | nonCT |
| none | none |

Crop input to non-zero region¶

- name: inCropNonZeroRegions, type: Bool, default: TRUE¶

Crops the input to the image region that contains non-zero values.
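As an illustration of cropping to the non-zero region, the sketch below computes the bounding box of all non-zero values in a small 2D image stored as nested lists. Using a rectangular bounding box is an assumption taken from how nnU-Net preprocessing is commonly described; the module's exact behavior may differ, and both function names are hypothetical.

```python
def nonzero_bounding_box(image):
    """Return (row_start, row_end, col_start, col_end), end exclusive.

    Returns None if the image contains only zeros.
    """
    rows = [r for r, row in enumerate(image) if any(v != 0 for v in row)]
    if not rows:
        return None
    cols = [c for c in range(len(image[0])) if any(row[c] != 0 for row in image)]
    return (rows[0], rows[-1] + 1, cols[0], cols[-1] + 1)


def crop(image, box):
    """Cut the given box out of the image."""
    row_start, row_end, col_start, col_end = box
    return [row[col_start:col_end] for row in image[row_start:row_end]]


image = [
    [0, 0, 0, 0],
    [0, 5, 7, 0],
    [0, 0, 3, 0],
    [0, 0, 0, 0],
]
box = nonzero_bounding_box(image)
cropped = crop(image, box)
```

Zeros inside the bounding box are kept; only the all-zero border is removed.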

Use only non-zero regions for nonCT and CT2 z-score¶

- name: inUseNonZeroRegionForZScore, type: Bool, default: FALSE¶

If true, only non-zero voxels (after the image values have been normalized with the DICOM Rescale Intercept and Slope) are considered when computing the mean and standard deviation for z-scoring (in nonCT and CT2 z-score normalization mode).
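The effect of restricting the statistics to non-zero voxels can be seen on a small list of values. This is a hedged sketch: it uses the population standard deviation and normalizes all voxels with the selected statistics, both of which are assumptions about the module's behavior. The function name `z_score` is hypothetical.

```python
import math


def z_score(values, use_nonzero_only=False):
    """Standardize values; optionally take mean/std from non-zero values only."""
    selected = [v for v in values if v != 0] if use_nonzero_only else list(values)
    if not selected:
        raise ValueError("no voxels available for computing statistics")
    mean = sum(selected) / len(selected)
    variance = sum((v - mean) ** 2 for v in selected) / len(selected)
    # Guard against division by zero for constant inputs.
    std = max(math.sqrt(variance), 1e-8)
    return [(v - mean) / std for v in values]


values = [0.0, 0.0, 2.0, 4.0]
all_voxels = z_score(values)
nonzero_only = z_score(values, use_nonzero_only=True)
```

For images with a large zero-valued background, the two variants can differ considerably, because the background pulls the mean toward zero and inflates the standard deviation.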

Lower Clipping Threshold¶

- name: inLowerClippingThreshold, type: Double, default: 0¶

Lower clipping threshold (5th percentile of training data).

Upper Clipping Threshold¶

- name: inUpperClippingThreshold, type: Double, default: 0¶

Upper clipping threshold (95th percentile of training data).

Mean¶

- name: inMean, type: Double, default: 0¶

Mean used for standardization.

Standard Deviation¶

- name: inStandardDeviation, type: Double, default: 0¶

Standard deviation used for standardization.
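The clipping thresholds, mean, and standard deviation work together in schemes that clip and then standardize. A minimal sketch of that combination, assuming the order "clip first, then (value - mean) / std" described for channel 0; the function name `clip_and_standardize` and the example numbers are hypothetical.

```python
def clip_and_standardize(values, lower, upper, mean, std):
    """Clip values to [lower, upper], then standardize with mean and std."""
    if std <= 0:
        raise ValueError("standard deviation must be positive")
    if lower > upper:
        raise ValueError("lower threshold must not exceed upper threshold")
    return [(min(max(v, lower), upper) - mean) / std for v in values]


# Made-up intensities and parameters for illustration only.
normalized = clip_and_standardize(
    [-2000.0, 0.0, 100.0, 5000.0], lower=-1000.0, upper=1000.0, mean=0.0, std=500.0
)
```

The sketch rejects a standard deviation of 0, so the four parameters would need to be set to the values from training before such a scheme is used.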

Apply Softmax¶

- name: inApplySoftmax, type: Bool, default: TRUE¶

Applies softmax to the neural network outputs. This is required if the neural network does not include a softmax layer itself.
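For reference, softmax turns the per-class outputs of one voxel into probabilities that sum to 1. The sketch below is a generic, numerically stable formulation and not taken from the module's code.

```python
import math


def softmax(logits):
    """Convert raw per-class outputs of one voxel into probabilities."""
    largest = max(logits)
    # Subtracting the maximum avoids overflow without changing the result.
    exponentials = [math.exp(v - largest) for v in logits]
    total = sum(exponentials)
    return [e / total for e in exponentials]


probabilities = softmax([2.0, 1.0, 0.1])
```

Applying softmax twice would flatten the probabilities, which is why the option should be disabled for networks that already end in a softmax layer.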

Inference Batch Size¶

- name: inBatchSize, type: Integer, default: 1, minimum: 1¶

Applies neural network inference to Inference Batch Size patches at once. This can potentially improve the running time of the inference, but requires more memory for the neural network inference.
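Conceptually, batching groups the patches so that several are passed to the network in one call. A small sketch of such grouping, with a hypothetical helper name; how the module schedules patches internally is not documented here.

```python
def batches(patches, batch_size):
    """Yield consecutive groups of at most `batch_size` patches."""
    if batch_size < 1:
        raise ValueError("batch size must be at least 1")
    for start in range(0, len(patches), batch_size):
        yield patches[start:start + batch_size]


grouped = list(batches(["p0", "p1", "p2", "p3", "p4"], 2))
```

The last group can be smaller than the batch size when the number of patches is not a multiple of it.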

Use Mask¶

- name: inUseMask, type: Bool, default: FALSE¶

Apply a mask to the inference result.

Is Multi-Label Segmentation¶

- name: inIsMultiLabelSegmentation, type: Bool, default: FALSE¶

Activate this if your model has a multi-label output, i.e. if one voxel can have multiple labels at the same time instead of only one.
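The difference between the two modes can be shown for the class probabilities of a single voxel. This sketch assumes that single-label output selects the most probable class and that multi-label output thresholds each class independently; the threshold of 0.5 and the function name `decode_voxel` are assumptions made for illustration.

```python
def decode_voxel(probabilities, multi_label=False, threshold=0.5):
    """Turn the class probabilities of one voxel into a binary label vector."""
    if multi_label:
        # Each class is decided on its own, so several can be active.
        return [1 if p >= threshold else 0 for p in probabilities]
    # Exactly one class wins: the one with the highest probability.
    winner = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return [1 if i == winner else 0 for i in range(len(probabilities))]


single = decode_voxel([0.1, 0.7, 0.2])
multi = decode_voxel([0.2, 0.9, 0.6], multi_label=True)
```

In the single-label case the result is a one-hot vector, while the multi-label result can contain any number of ones, including none.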

X¶

- name: inMirrorX, type: Bool, default: FALSE¶

Mirror X-axis for test time augmentation.

Y¶

- name: inMirrorY, type: Bool, default: FALSE¶

Mirror Y-axis for test time augmentation.

Z¶

- name: inMirrorZ, type: Bool, default: FALSE¶

Mirror Z-axis for test time augmentation.
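Test time augmentation by mirroring runs the model on flipped copies of the input, flips the predictions back, and averages them. The sketch below is an illustration under assumptions: it enumerates all combinations of the enabled axes, as the nnU-Net code base does, and demonstrates the averaging in one dimension. Whether the module combines axes in exactly this way is not stated here, and all function names are hypothetical.

```python
from itertools import combinations


def mirror_combinations(mirror_x, mirror_y, mirror_z):
    """List every combination of enabled mirror axes, starting with 'no flip'."""
    enabled = [
        axis
        for axis, active in (("x", mirror_x), ("y", mirror_y), ("z", mirror_z))
        if active
    ]
    result = []
    for count in range(len(enabled) + 1):
        result.extend(combinations(enabled, count))
    return result


def predict_with_mirroring_1d(signal, predict):
    """Average the plain prediction with the prediction of the flipped input."""
    plain = predict(signal)
    # Flip the input, predict, then flip the prediction back.
    mirrored = predict(signal[::-1])[::-1]
    return [(a + b) / 2.0 for a, b in zip(plain, mirrored)]


combos = mirror_combinations(True, False, True)
# Toy model whose output depends on the position, so mirroring has an effect.
averaged = predict_with_mirroring_1d([1, 2, 3], lambda s: [v + i for i, v in enumerate(s)])
```

Each enabled axis doubles the number of combinations, so enabling all three axes leads to eight forward passes per patch in this scheme.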

Enable additional raw model output¶

- name: addRawModelOutput, type: Bool, default: FALSE¶

Adds the “raw” model outputs as the additional output field outputRawProbabilities, i.e. the softmax outputs before they have been binarized into discrete labels.

Setup From Model Metadata¶

- name: setupFromModelMetadata, type: Trigger¶

Sets the inference parameters from the connected model.